The Five Billion Dollar MVP

First published November 16th, 2024. Edited September 26th, 2025

Introduction

There is a “standard model” of how to start a company that’s emerged over the last few decades:

- Build a “minimum viable product” (MVP) as quickly as possible.

- Launch the MVP as soon as it’s done and try to get people to use it and pay for it.

- Aggressively collect customer feedback and iteratively improve the product based on that feedback.

- Look for “product-market fit.” If and only if the company finds it, scale and grow revenue from there.

The aim of this approach is to expose the startup to the realities of the market as quickly as possible. The company gets immediate feedback on whether they understand their customers and whether their product is any good. They avoid the trap, which is endemic with engineers, of spending lots of time building a product without first validating that they’re building the right thing. This approach gets the startup moving with very little capital. The company limits risk by trying to find product-market fit while it is still small and burn is low. And once the startup does decide to raise significant capital, they will have data showing whether the business is working. The best articulation of this philosophy is in “The Lean Startup,” by Eric Ries, and in the work of Steve Blank. By this point, it has thoroughly saturated entrepreneurial culture: the pilot episode of HBO’s Silicon Valley was titled “Minimum Viable Product.”

However, there is a particular type of project where it is structurally impossible to use the standard model. The minimum viable product cannot be built and launched quickly - instead, it requires large amounts of capital upfront, before anyone can ever use it. Something about the nature of the technology makes incremental development impossible and instead requires years of work upfront. The bar for a “viable” product is something that is capable of producing value for its users. For this sort of technology, the smallest version that can create value is large enough to be a challenge. Difficult MVPs are common in industries which are oriented towards the physical world: in aerospace, pharmaceuticals, robotics, semiconductors, energy, and others.

I am particularly interested in products where the MVP costs five billion dollars or more. Even in hard industries, there are very few products where the cost to get to market is so extraordinarily high. My first job out of college was at a company that tried to build a five billion dollar MVP, and we basically failed. OneWeb raised $3.4 billion from Softbank and other investors in order to build a low Earth orbit satellite internet constellation. We needed several billion more to finish the system and start providing internet service, but in 2020 Softbank declined to invest any more capital. So OneWeb went bankrupt. Not many people have the opportunity to watch $3.4 billion get incinerated at their first job. It was an odd and fascinating introduction to capitalism, and the experience motivated me to think through why we raised that capital and why we didn’t get the job done.

There are very few technologies where a five billion dollar MVP is necessary, but those technologies have the potential to be transformational. $5-10 billion is just about the most a corporation has ever spent on a novel project that’s not within an existing business line. Industry is only willing to take risks that large when the potential reward is correspondingly large. The price of a project only gets that high when the scale required is large, the engineering challenge is extreme, the safety requirements are stringent, and there is no way to divide the project into something smaller and more manageable. These projects are extremely difficult to pull off. OneWeb was not the first startup to burn billions of dollars on a five billion dollar MVP, and it won’t be the last. I’m going to step through three examples: satellite internet constellations, nuclear fusion, and supersonic passenger aviation.

LEO Satellites

A picture of a batch of Starlink satellites on the dispenser, attached to the second stage of a Falcon 9 just before deployment. The dispenser is a long, rectangular rack; the view of the camera is from one end, showing two columns and many rows of satellites. Image credit: CC0

In that first job at OneWeb, we were building a low Earth orbit (LEO) satellite internet constellation. Most satellite bandwidth has in the past been delivered by satellites in geostationary orbit (GEO). This is the altitude at which a satellite orbits the earth once every 24 hours, so a satellite in GEO appears stationary in the sky from the perspective of someone on the ground. As a result, a single satellite can provide constant coverage of an area, and the customer can use an inexpensive parabolic antenna that is permanently pointed at the satellite (the typical “satellite dish”). The issue with GEO, though, is that it’s 35,800 km[1] away, more than five times the earth’s radius. This is far enough that there is a light-speed delay of roughly a quarter of a second while the signal makes the trip from Earth up to the satellite and back down to the destination. So the major drawback of geostationary satellites is that any real-time communication that uses them is obnoxiously laggy. If, instead, the satellites are in a low Earth orbit, between about 400 and 1200 km[2] altitude, this lag is all but eliminated. But a satellite in LEO moves quickly across the sky from the perspective of someone on the ground, causing two serious problems. First, the antenna on the ground needs to be capable of following the spacecraft’s movement. I’m not going to dwell on it here, but creating an antenna that can track satellites while remaining cheap enough for a consumer to buy has been one of the major projects of the antenna industry over the last decade. Second, and the bigger problem for us, the customer can only see a low-orbiting satellite for about four minutes before it moves below the horizon. So a new satellite must rise into view above the horizon every four minutes, or else the network connection will drop. This means the satellites must cover the entire planet to provide constant coverage anywhere, or else the roving holes in coverage will cause periodic network dropouts[3]. So the number of satellites needed to do LEO satellite internet, and the number of rockets needed to launch them, is comically large.
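A back-of-the-envelope check of the orbit figures above can be sketched in a few lines of Python. This is a simplification, not a link-budget model: it assumes the satellite is directly overhead, a circular orbit, and standard textbook values for the speed of light, Earth's radius, and Earth's gravitational parameter. The function names are my own, chosen for illustration.

```python
import math

C_KM_S = 299_792.458      # speed of light in vacuum, km/s
MU_EARTH = 398_600.4418   # Earth's gravitational parameter, km^3/s^2
R_EARTH_KM = 6_371.0      # mean Earth radius, km


def round_trip_delay_s(altitude_km: float) -> float:
    """Light-speed delay for ground -> satellite -> ground.

    Assumes the satellite is directly overhead, so the path is
    exactly twice the altitude (the shortest possible case).
    """
    return 2 * altitude_km / C_KM_S


def orbital_period_s(altitude_km: float) -> float:
    """Period of a circular orbit at this altitude (Kepler's third law)."""
    semi_major_axis_km = R_EARTH_KM + altitude_km
    return 2 * math.pi * math.sqrt(semi_major_axis_km**3 / MU_EARTH)


# Altitudes quoted in the text: GEO and the two ends of the LEO range.
for name, altitude_km in [("GEO", 35_800), ("LEO (high)", 1_200), ("LEO (low)", 400)]:
    delay_ms = round_trip_delay_s(altitude_km) * 1000
    period_min = orbital_period_s(altitude_km) / 60
    print(f"{name:10s} {altitude_km:>6} km  delay {delay_ms:6.1f} ms  period {period_min:7.1f} min")
```

Under these assumptions the GEO round trip comes out a little under a quarter of a second, while the LEO round trip is a few milliseconds. The LEO orbital period is on the order of an hour and a half, which is why any one satellite is only briefly overhead.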

This scale is why the minimum viable low Earth orbit satellite communication system costs five billion dollars. All those rockets and satellites need to be paid for before revenue starts coming in. We have four solid examples of this sort of system, all of which cost at least five billion dollars. Starlink, built by SpaceX, is by far the best known. At time of writing in fall 2024, SpaceX is halfway through deploying the second generation Starlink system and has launched 7,148 satellites across 203 rocket launches[4]. Gwynne Shotwell, the COO of SpaceX, estimated in 2018 that Starlink would require “about $10 billion or more to deploy the system” (Source). Unfortunately, SpaceX is private so I don’t have a better estimate of the total system cost, but it certainly ended up being on the scale that Shotwell estimated. OneWeb actually had a second act and completed its constellation after getting purchased out of bankruptcy[5]. The company raised $3.4 billion prior to declaring bankruptcy in 2020, then in 2021 it declared it was “fully funded” after raising an additional $2.4 billion post-bankruptcy, for a total of $5.8 billion. There are two more examples of LEO satellite systems from the 90s, though for phone communications rather than internet. Iridium and Globalstar both raised several billion dollars, launched satellites in the 90s, and then went bankrupt[6]. These systems were much smaller, 48 satellites plus spares for Globalstar and 66 satellites plus spares for Iridium. But satellites and rockets used to cost more, so Globalstar raised $4 billion up to its bankruptcy, and Iridium raised $5 billion[7]. We’ve got four examples across three decades of new LEO satellite communications systems that cost at least $5 billion.
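The arithmetic behind two of those figures is easy to reproduce. The sketch below uses only the numbers quoted in the paragraph above (nothing here is independent data), and the variable names are illustrative.

```python
# Figures quoted in the text above, as of fall 2024.
starlink_satellites = 7_148
starlink_launches = 203
oneweb_pre_bankruptcy_bn = 3.4    # raised before the 2020 bankruptcy, $ billions
oneweb_post_bankruptcy_bn = 2.4   # raised after the bankruptcy, $ billions

# Average batch size per rocket; individual launches vary.
sats_per_launch = starlink_satellites / starlink_launches

# Total capital across both incarnations of OneWeb.
oneweb_total_bn = oneweb_pre_bankruptcy_bn + oneweb_post_bankruptcy_bn

print(f"Starlink: about {sats_per_launch:.0f} satellites per launch on average")
print(f"OneWeb: ${oneweb_total_bn:.1f} billion raised in total")
```

The average of roughly 35 satellites per launch gives a sense of how many rockets a constellation of thousands of satellites needs.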

Fusion Power

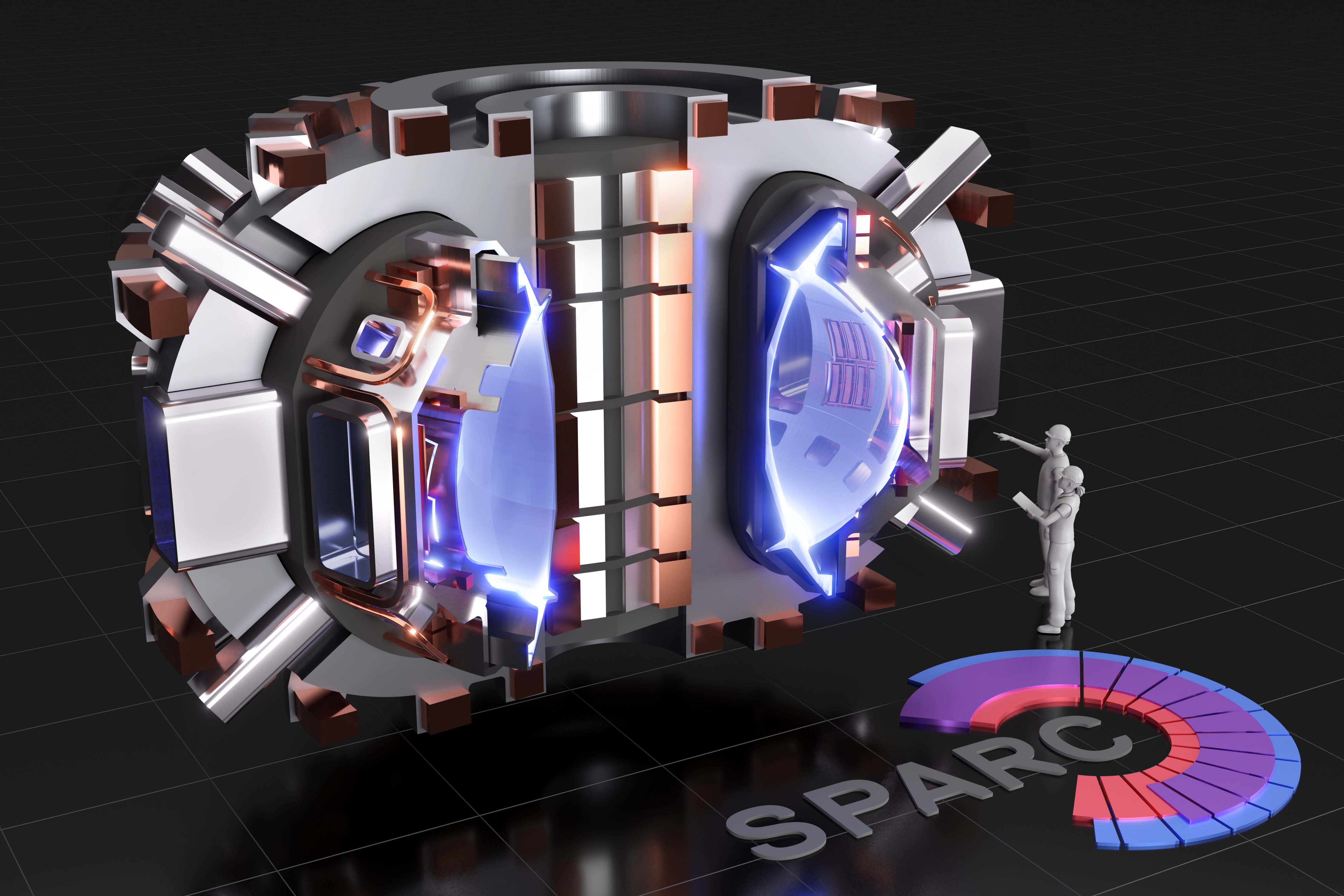

A render of the SPARC reactor, the reactor that Commonwealth Fusion intends to be the first net-energy-producing fusion reactor. The cutaway view shows the D-shaped magnets which surround the toroidal volume full of plasma. Image credit: Commonwealth Fusion

The second example of a five billion dollar MVP is nuclear fusion power. A power plant running nuclear fusion reactors could generate enormous quantities of cheap electricity with no emissions and almost no nuclear waste, more cheaply and safely than fission power and more consistently than solar or wind. In the most optimistic scenario, fusion power could be so abundant that utilities wouldn’t even bother billing based on usage, making electricity “too cheap to meter”[8]. Nuclear power today is generated by fission, by slamming uranium atoms with neutrons so that they break apart and release energy. For science reasons, though, fusing hydrogen releases far more energy per kilogram of fuel than splitting uranium. So a fusion power plant can hypothetically be used to generate far more energy, far more cheaply, than existing fission power plants. Unfortunately, for other science reasons, it’s also much harder to force two hydrogen atoms to hit each other hard enough to fuse than it is to hit a uranium atom with a neutron hard enough to make it split. So fusion energy has been something of a holy grail for decades, a staple of science fiction, tantalizing but unobtainable. The basic physical challenge is to create a very hot and very dense plasma of hydrogen in which atoms strike each other hard enough to fuse. This was first done in the 1950s, in the form of a laboratory demonstration and in the form of a bomb. But it takes an incredible amount of energy to create the conditions for fusion to occur, and to be useful as a power plant a fusion reactor needs to extract more energy from the plasma than it uses to maintain it. That’s never been done[9].

Several dozen fusion startups have raised a total of $7.2 billion in venture capital funding as of 2024. I’m going to focus on the most well funded one, Commonwealth Fusion, which spun out of MIT’s Plasma Science and Fusion Center in 2018. In 2021 they raised a monster $1.8 billion Series B round which brought their total funding to about $2 billion. Commonwealth is building a tokamak, a type of reactor which uses magnetic fields to compress and confine the hydrogen fuel. A tokamak consists of a donut-shaped reaction chamber surrounded by large electromagnets. The plasma moves through the donut and is kept away from the walls of the reaction chamber by the magnetic field. With tokamaks, the challenge is to create more electricity than is put into the electromagnets to generate the magnetic field. Commonwealth’s core innovation is a better magnet, which uses a new high-temperature superconductor material to generate a magnetic field 2-5x as strong as the previous state of the art. They have already demonstrated this magnet, and they claim that it can generate that field efficiently enough that net energy generation is possible. If so, Commonwealth then needs to incorporate those magnets into a working demonstration reactor, and eventually into a reactor that can extract energy from that plasma and convert it to electricity.

We have much less certainty about the total project cost for fusion than for satellites because nobody’s built a working fusion reactor yet. But the total cost will be high. Commonwealth predicts net energy generation with their current reactor in 2026, and providing power to the grid with their next generation reactor in the early 2030s. If they manage to achieve net energy generation, it would be a spectacular achievement, but it still wouldn’t be an MVP. They might be able to reach net energy generation with their current funding ($2 billion), but they will certainly need to raise much more to build an operational reactor which actually creates value by delivering power to the electrical grid.

The hurdle here is the inherent difficulty of the engineering needed to accomplish the goal. Fusion startups are trying to build a machine of a sort which has never been successful. This is in contrast with satellite internet, where the satellites themselves are mature but the scale is enormous[10]. Commonwealth’s reactor is about the size of a house, a large machine but not large enough that the scale drives the cost of the project. Fusion also may have some remaining scientific risk, unanswered questions about the plasma physics or nuclear physics that must be answered to build a working reactor. I don’t have the background to tell what fraction of the challenge comes from scientific issues rather than engineering issues, but a full 25% of the fusion workforce are scientists. This is very uncommon in industry, except in biotechnology and pharmaceuticals, and suggests there is still scientific risk. Venture capitalists strongly prefer to deploy capital into projects where all the scientific risk has been resolved, so this is notable. There is one last risk for fusion: even if it works, fusion might not beat solar power on cost. The cost of solar power has dropped spectacularly over the last two decades. Cost projections for fusion are very shaky, given how immature the technology is, and I haven’t seen a detailed case for why we ought to expect fusion power to be cheaper than solar power[11].

Supersonic Passenger Aircraft

A Concorde taking off from London Heathrow in 2001. Note the wide, triangular wing, and the engines on the underside with square air intakes, rather than the normal circular jet engines. Image credit: David Parker / BWP Media / Getty Images

The last technology to discuss is supersonic passenger aircraft. The appeal is obvious: a plane that flies faster gets people to their destination sooner. Existing commercial aircraft cruise at an airspeed of 540-560 mph. They can’t go faster because if an aircraft approaches the speed of sound, or Mach 1, shock waves form and the structural loads and aerodynamics of the aircraft change abruptly[12]. So going faster requires a new aircraft specifically designed to carry passengers at supersonic speeds. These vehicles would first be used for business travelers flying internationally, where the time savings are most significant, trans-oceanic routes limit concerns about sonic booms, and the passengers are least sensitive to cost. If it takes 4 hours to fly from New York to London rather than 8, or 6 hours from San Francisco to Tokyo rather than 12, the world is suddenly much smaller.
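To put rough numbers on that speed gap, here is a minimal sketch. It takes Mach 1 as about 663 mph at cruising altitude and uses the midpoint of the 540-560 mph subsonic range. The supersonic cruise of Mach 1.7 is an assumption chosen for illustration, and the calculation ignores taxi, climb, descent, and routing, so it only scales the quoted subsonic trip times by the cruise speed ratio.

```python
MACH_1_MPH = 663.0             # speed of sound at roughly 35,000 ft
SUBSONIC_CRUISE_MPH = 550.0    # midpoint of the 540-560 mph range
ASSUMED_SUPERSONIC_MACH = 1.7  # assumption for this sketch


def cruise_mph(mach: float) -> float:
    """Convert a Mach number at cruising altitude to miles per hour."""
    return mach * MACH_1_MPH


def speedup(mach: float) -> float:
    """How many times faster than a typical subsonic airliner."""
    return cruise_mph(mach) / SUBSONIC_CRUISE_MPH


def supersonic_hours(subsonic_hours: float, mach: float) -> float:
    """Scale a subsonic trip time by the cruise speed ratio only."""
    return subsonic_hours / speedup(mach)


ratio = speedup(ASSUMED_SUPERSONIC_MACH)
print(f"Mach {ASSUMED_SUPERSONIC_MACH} is about {cruise_mph(ASSUMED_SUPERSONIC_MACH):.0f} mph, "
      f"{ratio:.2f}x subsonic cruise")
for route, hours in [("New York to London", 8), ("San Francisco to Tokyo", 12)]:
    print(f"{route}: {hours} h -> {supersonic_hours(hours, ASSUMED_SUPERSONIC_MACH):.1f} h")
```

With these inputs the speed ratio is close to two, which is consistent with the halved trip times quoted above.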

A variety of military aircraft can fly supersonic, but only two civilian aircraft have ever done so: the Concorde, developed jointly by the British and French, and the Tupolev Tu-144, developed by the Soviets. Neither was a great success. The Tu-144 crashed several times during development and flew passengers for only seven months before being retired from commercial service. The Concorde did much better, flying for 27 years and turning an operational profit, but it never came close to recouping its development costs. The only operators of the aircraft, Air France and British Airways, both decided to retire their Concordes in 2003. The causes of the commercial failure of the Concorde are disputed, but the most significant is the extra fuel needed to sustain supersonic speeds. Drag increases as speed increases, and there is a further spike in drag from shock waves when an aircraft goes supersonic, so flying at that speed takes more fuel and more money. There are also regulatory constraints: the U.S. banned civilian supersonic flight over land in 1973, which along with measures by other countries restricted Concorde’s useful routes mostly to international travel across oceans[13]. But neither of these problems is insurmountable. The world is richer and more globalized today and there are certainly more people willing to pay what’s necessary to travel faster. A great deal of effort has been made in the past few decades to make planes quieter, both at subsonic and supersonic speeds. And governments may be open to working with industry to revise regulations to allow more supersonic flight while still preventing the worst sonic booms. Since the retirement of the Concorde, there has been persistent interest in taking another shot at supersonic passenger aircraft.

The best-funded company attempting to revive supersonic passenger aviation is Boom Supersonic, founded in 2014. They are developing an 80 seat passenger aircraft capable of cruising at Mach 1.7, about twice as fast as existing aircraft. Boom has raised ~$700m and has United Airlines as a launch customer. They have done a great deal of design work and flown a subscale prototype, but they have a long way to go before producing a working vehicle. From the outside, the biggest challenge facing Boom is developing an engine for their aircraft. Jet engines are one of the most sophisticated and difficult types of machines ever built, and there are only three major manufacturers who build them. Boom was partnered with Rolls-Royce to develop an engine, but Rolls-Royce pulled out of that partnership in 2022[14]. As a result, Boom has been forced to develop its own engine, named Symphony. I have no idea how far they’ve gotten in development, but it’s a bad sign that Rolls-Royce decided to pull out of the program, and at a minimum it means development of the engine got a delayed start.

And even when Boom completes engine development and has a working prototype aircraft, in one sense they will only just be beginning, because now they have to pass muster with the FAA. Aircraft certification is an intense and time-consuming process. The FAA requires extensive testing and deep reviews of the vehicle design. I’m not familiar with the nuances of certification for passenger aircraft, but I can say broadly that the FAA cares much more about safety than it does about getting new technology to market. Certification has been a formidable hurdle for many aviation startups, particularly for manned aircraft. The cost of failure is simply much higher for an aircraft carrying passengers than it is for a communication satellite or for a nuclear fusion reactor[15]. All machines fail; it is impossible to make a complex technology foolproof. The consequences of failure for the vast majority of products are financial. But when people are involved, great effort must be made to minimize the risk of catastrophic failure. And that work needs to happen up front, because working out kinks after the aircraft enters service isn’t acceptable.

Development of the Concorde cost between $15 and $20 billion, adjusted for inflation and converted to dollars. That’s our only solid data on the costs of developing this sort of aircraft. If Boom succeeds, it is likely they’ll need less capital than Concorde, because the technology is not new and because private engineering programs do tend to be cheaper than comparable government efforts. I don’t feel that I’m going out on a limb to expect that costs will be more than $5 billion, and anything from $5 billion to $15 billion seems plausible to me. As in fusion, the high capital cost of supersonic aviation comes from the quantity and difficulty of the engineering work needed to get the aircraft flying. Supersonic aircraft are somewhere between the difficulty of LEO communication satellites and nuclear fusion. Unlike nuclear fusion, supersonic flight is a mature technology and there aren’t novel engineering or scientific problems that still need to be solved. But a plane is a more complicated and more difficult type of machine to get right than a satellite is. And as discussed, safety drives cost for a passenger plane in a way it does not in our other two examples.

Discussion

These three projects and the few others that require a five billion dollar MVP are compelling because they attempt to change some piece of fundamental technology. Communication, energy, and transportation are sectors which underpin everything else that happens in a technological society. Incremental change in these domains happens constantly. But significant improvement seems at this point to require a step-change in technology. It’s such a pain in the ass to build a five billion dollar MVP that no one will do it unless the project has the potential to be transformational. It sometimes feels like all the low-hanging fruit have been picked, and these projects are the few apparent opportunities to cause economic change of the sort which happened when railroads or jet air travel first became available, or of the sort that’s happening now as the energy system transitions to cheap and plentiful solar and wind power.

It’s notable that even most very difficult projects fall well below the five billion dollar MVP level. Nuclear fusion is basically unique among new energy technologies today in requiring so much capital - as far as I can tell nothing else in the energy sector is comparable, except perhaps new types of fission reactors. Solar, wind, and batteries might have needed billions of dollars to reach their present scale, but unlike fusion, they can be subdivided into smaller viable products and built out incrementally. Most new technologies can be subdivided like this, or have a smaller version of the product which can be put into service initially. Companies make great efforts to avoid five billion dollar MVPs and only resort to one when there is no other option. For instance, SpaceX first built the small-scale Falcon 1 for a total project cost of around $100 million, and only moved to its primary mid-size rocket, the Falcon 9, after successfully launching Falcon 1 and winning NASA contracts in the $100s of millions. One notable exception is Relativity Space, a company developing reusable rockets that’s raised at least $1.3 billion. They’re the only company I know of to have opted into developing a larger MVP than they strictly need to. Like SpaceX, they initially developed a small rocket, Terran 1, but shelved it after one unsuccessful launch attempt in favor of developing their mid-size rocket, Terran-R. There are good economic reasons to invest in mid-size rockets rather than small rockets, and a rocket of Terran-R’s size will cost $500 million to $2 billion to develop, not $5 billion. But it is still quite uncommon and potentially dangerous for a company to willingly build a mid-size rocket as its first successful vehicle.

These companies are also interesting because they are doing things that have historically been the domain of governments. A state has access to vastly more resources than even the largest mega-corporation and isn’t constrained by the market or a need to make back an investment. Governments can carry the risk of a failed development project and fund things that venture capital had previously been unwilling to back. Governments routinely spend many billions on large infrastructure projects - bridges, tunnels, ports, and highways. NASA regularly builds multi-billion dollar scientific spacecraft. And the US Department of Defense is in the process of upgrading its fleet of nuclear aircraft carriers to the Gerald R. Ford class, at a cost of a cool $13 billion per ship. Private companies do invest many billions into existing business lines, but attempting new projects on this scale seems new and interesting. The first-generation supersonic passenger aircraft, the Concorde, was a joint effort by the UK and France. Development was started by a treaty between the two, and Charles de Gaulle picked the name. The most plausible second-generation aircraft is being developed by Boom, founded by a former mid-level tech executive and headquartered at a public-use airport in the suburbs of Denver. Government is the means by which society has accomplished things that are difficult, worthwhile and fundamental. It’s a big deal if industry can now do that sort of thing too.

But it remains to be seen whether this structure will really work. Many of the examples I’ve given raised capital in the era between 2018 and 2022, a period where persistently low interest rates and sustained economic stability meant that more venture capital was available than at any other point in history. There was more capital available than there were good opportunities to deploy it, and as a result allocating a billion dollars to an ambitious and competent company was attractive. Commonwealth Fusion has not built a reactor, Boom has only flown a subsonic demonstrator, and Relativity Space hasn’t made it to orbit. The five billion dollar MVP has all of the risks that the standard model of entrepreneurship aimed to avoid. These companies are spending a very long time in development, without the feedback and accountability that comes from exposure to the market. It is notable that the only unambiguously successful five billion dollar MVP we’ve covered is Starlink, which was built by SpaceX after they were already an established aerospace titan with a top-notch engineering organization. I don’t know if a true startup like OneWeb, Boom, or Commonwealth has ever successfully completed a five billion dollar MVP.

These are high-risk projects. Many or most of them will fail. But technological change does happen, and some of them will succeed. LEO satellite internet worked. After two decades of development and many dashed expectations, Waymo today carries thousands of passengers every day around the Bay Area and elsewhere in self-driving cars. This is a cynical era, with broad social skepticism of the notion of “progress” and the desirability of technological change. But for better or worse, engineering is the art of the possible. These projects create new tools. Choosing how to use those tools wisely is another matter. And personally, I’d much rather live in the version of the future world with great satellite internet, plentiful fusion power, and the option to cross the Pacific in an afternoon.

1. 22,250 miles

2. Between about 250 miles and 750 miles. Right now, you are closer to space than Boston is to Philadelphia.

3. The only exception is the polar regions. Satellites in inclined orbits can cover everything below a certain latitude, while leaving the poles uncovered. OneWeb didn’t take advantage of this, and the entire constellation was in polar orbits, but SpaceX did, and built the constellation in three stages. The stages respectively covered up to ~53 deg latitude (middle of Canada, just north of London), ~70 deg latitude (just above the Arctic Circle), and a final group of satellites to cover the poles.

4. Jonathan McDowell, an astronomer at Harvard, publishes excellent statistics tracking Starlink satellites and other megaconstellations, updated for each launch: https://planet4589.org/space/con/conlist.html

5. I left OneWeb in June of 2019, 9 months before the bankruptcy. I still count it as an unambiguous failure, despite the second act. OneWeb should be thought of as two different companies, one pre and one post bankruptcy. By wiping out the equity of the original investors but retaining most of the progress made, the bankruptcy effectively gave the new owners a $3.4 billion discount on the project. Additionally, half the capital to buy OneWeb out of bankruptcy came from the British government. Their involvement was unusual and was a product both of a spectacularly successful lobbying effort by OneWeb and of a particular historical moment immediately after Brexit when the UK had just exited many joint EU space projects and was interested in securing a large satellite program which could anchor the British aerospace sector. The post-bankruptcy company has been fairly successful in making use of the assets they acquired, but the pre-bankruptcy company is the one that matters for us.

6. Just like OneWeb, after the bankruptcy wiped out the original investors, both companies were purchased out of bankruptcy and have operated profitably since.

7. Adjusted for inflation, these two would be roughly $7-10 billion, though estimating total cost and adjusting for inflation is tricky for large, long-term development projects.

8. This phrase was first used to describe the potential of abundant nuclear energy in 1954. It didn’t work out that way for fission power plants, but hope springs eternal.

9. Except for those bombs, of course, which do create more energy than they consume. But they are a terrible way to generate electricity.

10. Satellite internet certainly required an enormous amount of new engineering work, and there are some new technologies involved, particularly electronically-steerable phased array antennas. But on the whole, satellites are a mature technology.

11. I’m actually pretty worried about this, but haven’t done the research to say anything more definitive. Solar panels are mass-produced in factories while reactors are built on-site in low quantities. The clearest lesson of industrial economics is that anything built in large quantities in factories will eventually get extremely cheap (televisions, cars, household goods, etc.) while things built in low quantities or on site will remain stubbornly expensive (houses, ships, bridges, etc.). The fusion reaction may generate an incredible amount of energy per gram of hydrogen fuel, but the final operating cost of a fusion power plant depends on the cost and complexity of the reactor. We are largely guessing at what will be required to run a full-scale plant. So even if fusion works, it might still be cheaper to manufacture solar panels and put them in a field rather than building and operating a reactor. My impression is that the nascent fusion industry isn’t taking this risk seriously enough.

12. Mach 1 is 663 mph at a typical cruising altitude of 35,000 ft. The effective speed limit is actually less than Mach 1 because as an aircraft gets close to Mach 1 the airflow accelerates as it flows around the aircraft body and will go supersonic at particular points, even if the aircraft’s overall speed is subsonic. This is known as transonic flight.

13. The dispute I alluded to is mostly about which of these factors, cost or regulation, was most responsible for the decision of the airlines to take Concorde out of service. Concorde is sometimes cited as an example of government regulation killing perfectly good technology, similarly to how people sometimes talk about nuclear power. Personally, I agree that the early regulation of supersonic flight was hamfisted and excessive, but I think the high cost of flying supersonic was the main problem.

14. The same Rolls-Royce as the car brand, though the automotive business and the aviation business split into separate companies in 1973. Like many automobile companies in the early 1900s, their core skill set was engine design and they applied it to both cars and planes.

15. Nuclear fusion is inherently safer than fission. Uranium is much more radioactive than the small quantities of short-lived hydrogen isotopes needed for fusion or the few reaction byproducts. So accidental release of radioactive material is less likely and less harmful. And a fusion reactor cannot “melt down” in the way an uncontrolled fission chain-reaction can. It is very difficult to sustain the conditions for fusion to take place, and if plasma containment is lost the plasma rapidly cools and energy generation ceases. The hot plasma may damage the reactor but it cannot explode.